The concept of a dynamic focal plane is becoming increasingly important in computational imaging, virtual reality (VR), and advanced optics. By adjusting the focal plane in real time in response to user movement or visual input, a system can simulate depth changes more realistically, mimicking how the human eye focuses at different distances. The technique can improve image realism, reduce visual fatigue, and enhance immersion in 3D applications. In this article, we explore how a dynamic focal plane works, its technical foundations, and its practical applications.

Understanding the Dynamic Focal Plane in Depth Simulation

The term dynamic focal plane refers to the real-time adjustment of the image focus position, allowing systems to replicate depth perception in a way that closely aligns with human visual behavior.

The Human Eye as a Model

The human eye focuses through accommodation: the ciliary muscle changes the shape of the crystalline lens, adjusting its optical power so that objects at different distances come into focus. This biological mechanism inspired the development of dynamic focal plane systems. When this behavior is emulated in display technologies, a dynamic focal plane can:

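- Reduce the vergence-accommodation conflict (the mismatch between the distance at which the eyes converge and the fixed distance at which they must focus on the display), a common cause of eye strain in VR

- Simulate multi-depth scenes by shifting the focus point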
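To make the conflict concrete, the sketch below expresses both distances in diopters (1 / distance in metres), the unit commonly used for focus. It assumes a hypothetical fixed-focus headset whose focal plane sits at 2 m and a 4 mm pupil; the blur estimate uses the usual small-angle geometric approximation (blur angle ≈ pupil diameter × defocus in diopters), not a full model of the eye.

```python
import math

def diopters(distance_m: float) -> float:
    """Convert a viewing distance in metres to diopters; math.inf maps to 0 D."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 1.0 / distance_m

def defocus_d(distance_a_m: float, distance_b_m: float) -> float:
    """Absolute focus difference between two distances, in diopters."""
    return abs(diopters(distance_a_m) - diopters(distance_b_m))

def vac_mismatch_d(vergence_m: float, display_focus_m: float) -> float:
    """Vergence-accommodation conflict: the eyes converge at vergence_m,
    but a fixed-focus display forces them to focus at display_focus_m."""
    return defocus_d(vergence_m, display_focus_m)

def blur_angle_deg(object_m: float, focus_m: float, pupil_m: float = 0.004) -> float:
    """Approximate angular diameter of the defocus blur circle when the eye
    focuses at focus_m but the content sits at object_m (small-angle model).
    pupil_m = 4 mm is an assumed, typical indoor pupil size."""
    return math.degrees(pupil_m * defocus_d(object_m, focus_m))

# Hypothetical scenario: virtual object at 0.5 m, fixed focal plane at 2 m.
print(vac_mismatch_d(0.5, 2.0))            # 1.5 (diopters)
print(round(blur_angle_deg(0.5, 2.0), 2))  # 0.34 degrees of blur if the eye accommodates to 0.5 m
```

A dynamic focal plane tries to drive this mismatch toward 0 D by moving the focal plane to the vergence distance; the same blur estimate could also be used to render depth-dependent blur for content away from the gazed depth.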

Core Parameters in Focal Plane Adjustment

To effectively simulate depth using a dynamic focal plane, several parameters must be managed:

- Focal distance range: The system should cover focal distances from about 20 cm (5 diopters) to optical infinity (0 diopters), which approximates the range of human accommodation.

- Update rate: The focal plane should be updated at 60–120 Hz to keep transitions smooth.

- Latency: The delay between an eye or head movement and the corresponding focus change should stay below about 20 milliseconds to avoid perceptible lag.
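These targets can be turned into a simple acceptance check. The sketch below is illustrative only: the `FocalPlaneSpec` class and its field names are hypothetical, and the thresholds are just the figures listed above (20 cm, i.e. 5 D, to infinity; at least 60 Hz; under 20 ms).

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class FocalPlaneSpec:
    """Hypothetical description of a dynamic focal plane system."""
    near_limit_m: float    # closest focal distance the optics can reach
    far_limit_m: float     # farthest focal distance (math.inf = optical infinity)
    update_rate_hz: float  # focal plane updates per second
    latency_ms: float      # tracking-to-focus delay

    def diopter_range(self) -> Tuple[float, float]:
        """(min, max) optical power in diopters; infinity maps to 0 D."""
        return (1.0 / self.far_limit_m, 1.0 / self.near_limit_m)

    def frame_period_ms(self) -> float:
        """Time available per focal plane update."""
        return 1000.0 / self.update_rate_hz

    def check(self) -> List[str]:
        """Return the targets this spec misses (an empty list means all are met)."""
        problems = []
        min_d, max_d = self.diopter_range()
        if max_d < 5.0 - 1e-9:  # 20 cm corresponds to 5 D
            problems.append(f"near limit {self.near_limit_m * 100:.0f} cm is farther than 20 cm")
        if min_d > 1e-9:
            problems.append("far limit does not reach optical infinity")
        if self.update_rate_hz < 60.0:
            problems.append(f"update rate {self.update_rate_hz:g} Hz is below 60 Hz")
        if self.latency_ms >= 20.0:
            problems.append(f"latency {self.latency_ms:g} ms is not below 20 ms")
        return problems

spec = FocalPlaneSpec(near_limit_m=0.25, far_limit_m=math.inf, update_rate_hz=90.0, latency_ms=15.0)
print(spec.diopter_range())  # (0.0, 4.0)
print(spec.check())          # ['near limit 25 cm is farther than 20 cm']
```

At 60 Hz each update has about 16.7 ms and at 120 Hz about 8.3 ms, so the 20 ms latency budget spans only a frame or two at these rates.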

Implementing Depth Simulation with a Dynamic Focal Plane

Creating realistic depth cues using a dynamic focal plane requires both hardware and software integration. Below is an outline of current techniques.

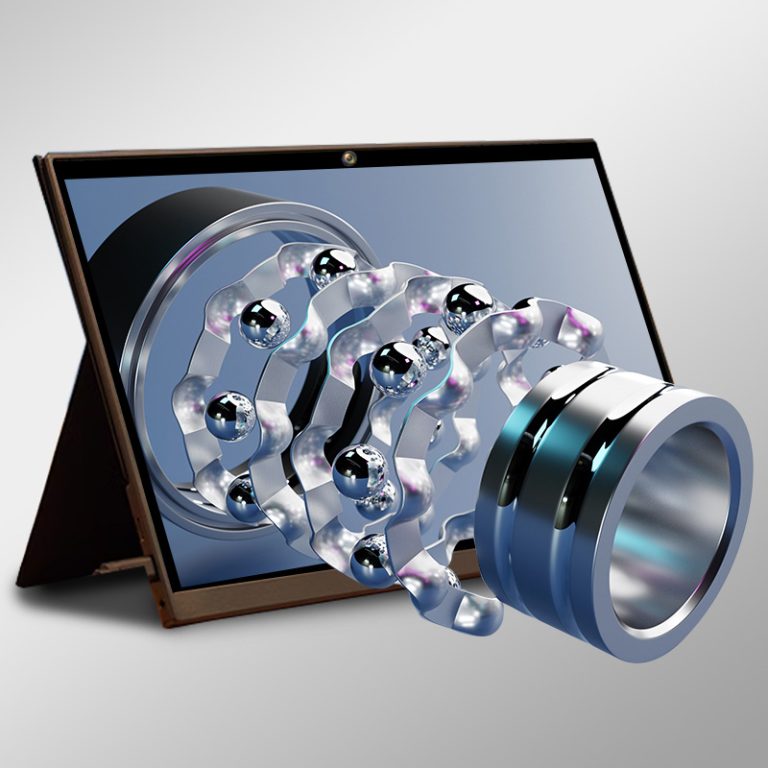

Hardware-Based Approaches

- Tunable Lenses – Use liquid crystal or electrowetting technology to adjust the optical focus electronically.

- Varifocal Displays – Adjust the physical screen or lens position according to eye tracking data.

- Multifocal and Light Field Displays – Present several focal planes (or a full light field) simultaneously, so the eye can select focus naturally and the system can further adapt content based on gaze.

Each of these systems enables real-time focal shifts, aligning perceived image depth with user focus.
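For a varifocal or tunable-lens headset, the control problem reduces to choosing where the virtual image of the screen should appear. The sketch below assumes an ideal thin eyepiece with the eye placed at the lens and a hypothetical 40 mm focal length; real headsets add eye relief, multi-element optics, and distortion, so treat it as an order-of-magnitude model. In diopters, the thin-lens relation for a virtual image is 1/s = 1/f + D_target, where s is the screen-to-lens distance.

```python
import math

def varifocal_screen_distance_m(focal_length_m: float, target_m: float) -> float:
    """Varifocal display: screen-to-lens distance that puts the screen's virtual
    image at target_m (ideal thin lens, eye at the lens): 1/s = 1/f + 1/target."""
    return 1.0 / (1.0 / focal_length_m + 1.0 / target_m)

def tunable_lens_power_d(screen_m: float, target_m: float) -> float:
    """Tunable lens with a fixed screen at screen_m: lens power in diopters that
    puts the virtual image at target_m: P = 1/screen - 1/target."""
    return 1.0 / screen_m - 1.0 / target_m

f = 0.040  # hypothetical 40 mm eyepiece focal length
for target in (math.inf, 2.0, 0.5, 0.2):
    s_mm = varifocal_screen_distance_m(f, target) * 1000
    p = tunable_lens_power_d(f, target)  # fixed screen placed at the focal length
    print(f"target {target} m: screen at {s_mm:.1f} mm, or tunable lens at {p:.1f} D")
```

With these assumed numbers, covering 20 cm to infinity takes about 6.7 mm of screen travel (40.0 mm down to 33.3 mm) or a 5 D tuning range (25 D down to 20 D) in the lens, which is how the 5-diopter range from the parameter list translates into a hardware requirement.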

Software Algorithms and Predictive Models

- Gaze Tracking Integration – Eye trackers capture where the user is looking, allowing dynamic focal planes to respond accordingly.

- Depth Mapping – Real-time depth estimation using stereo cameras or LiDAR allows content to shift focus intelligently.

- Predictive Focus Models – AI models anticipate user gaze and pre-adjust the focal plane, reducing latency effects.
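Gaze tracking and depth mapping can be combined into a small function that turns an eye-tracker sample and a depth map into a focal target. This is a sketch under stated assumptions: the helper name `focus_target_d` is hypothetical, the depth map is a plain 2-D list of distances in metres aligned with the eye tracker's pixel coordinates, and invalid depths are marked with zero, a negative value, or NaN. Taking the median over a small window is one reasonable way to resist tracker jitter and depth edges, not a standard method.

```python
from statistics import median
from typing import List, Optional, Tuple

def focus_target_d(depth_map: List[List[float]], gaze_xy: Tuple[int, int],
                   window: int = 2, max_d: float = 5.0) -> Optional[float]:
    """Return the focal target in diopters at the gazed pixel, clamped to
    [0, max_d], or None if no valid depth is found near the gaze point."""
    x, y = gaze_xy
    rows, cols = len(depth_map), len(depth_map[0])
    samples = []
    for r in range(max(0, y - window), min(rows, y + window + 1)):
        for c in range(max(0, x - window), min(cols, x + window + 1)):
            d = depth_map[r][c]
            if d > 0:  # rejects zeros and negatives; NaN > 0 is also False
                samples.append(d)
    if not samples:
        return None
    target_d = 1.0 / median(samples)  # an infinite depth maps to 0 D
    return min(max(target_d, 0.0), max_d)  # clamp to the optics' range

# 5x5 scene at 2 m with one noisy near sample next to the gaze point.
scene = [[2.0] * 5 for _ in range(5)]
scene[2][3] = 0.3
print(focus_target_d(scene, (2, 2), window=1))  # 0.5: the median ignores the outlier
```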

These software layers must operate with high precision, often requiring a focal accuracy of about ±1 diopter to ensure comfort and realism.
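The predictive step and the accuracy target can be illustrated together. The sketch below deliberately swaps the AI models mentioned above for the simplest possible predictor, a constant-velocity extrapolation in diopter space, and checks the result against the ±1 diopter figure. `LinearFocusPredictor`, its parameters, and the 20 ms latency in the example are hypothetical.

```python
from typing import Optional, Tuple

class LinearFocusPredictor:
    """Constant-velocity stand-in for a predictive focus model (illustrative)."""

    def __init__(self, latency_s: float, min_d: float = 0.0, max_d: float = 5.0):
        self.latency_s = latency_s              # time the optics need to settle
        self.min_d, self.max_d = min_d, max_d   # hardware focus range in diopters
        self._last: Optional[Tuple[float, float]] = None  # (time_s, diopters)
        self._velocity = 0.0                    # diopters per second

    def update(self, t_s: float, measured_d: float) -> float:
        """Feed one gaze-depth sample; return the focus to command now so the
        optics land near where the gaze will be after latency_s."""
        if self._last is not None and t_s > self._last[0]:
            self._velocity = (measured_d - self._last[1]) / (t_s - self._last[0])
        self._last = (t_s, measured_d)
        predicted = measured_d + self._velocity * self.latency_s
        return min(max(predicted, self.min_d), self.max_d)  # stay within range

def within_tolerance(commanded_d: float, actual_d: float, tol_d: float = 1.0) -> bool:
    """True if the focal error is within the +/-1 D figure."""
    return abs(commanded_d - actual_d) <= tol_d

predictor = LinearFocusPredictor(latency_s=0.020)
print(predictor.update(0.00, 1.0))  # 1.0 (no velocity estimate yet)
cmd = predictor.update(0.01, 1.5)   # gaze moving nearer at 50 D/s
print(round(cmd, 2))                # 2.5
print(within_tolerance(cmd, 2.4))   # True
```

Because gaze jumps abruptly between depths during saccades, a practical system would likely need to filter the velocity estimate; here the clamp only keeps overshoot inside the optics' range.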

Advantages and Application Areas

Why Use a Dynamic Focal Plane?

The adoption of dynamic focal plane systems offers several key benefits:

- Improved Visual Comfort: Reduces eye strain, especially during prolonged VR use

- Enhanced Immersion: Mimics real-world visual behavior, increasing realism

- Adaptive Focus: Customizes the visual experience based on each user’s interaction

Practical Applications Across Industries

- Virtual Reality (VR) and Augmented Reality (AR): Provides natural focal transitions in head-mounted displays, supporting longer usage times.

- Medical Imaging: Allows radiologists to explore multi-layer scans with dynamic focus adjustment.

- Defense and Aerospace: Enables pilots and operators to simulate real-world depth in training environments.

Headsets are already adopting related techniques: Meta has demonstrated varifocal research prototypes, and the Varjo XR-4 Focal Edition adds gaze-driven autofocus to its passthrough cameras.

Future Directions and Research Opportunities

As the dynamic focal plane approach matures, several research areas are gaining attention:

- Retinal Projection: Direct projection onto the retina with adjustable focal depth.

- Neural Focus Control: Using brain-computer interfaces to control the dynamic focal plane without eye tracking.

Continued improvements in AI, optics, and sensor fusion are expected to reduce power consumption and system size, enabling more compact and consumer-ready implementations.

Conclusion

Simulating depth changes with a dynamic focal plane is a promising direction for next-generation display and imaging technologies. By replicating natural focus behavior, it addresses key limitations in 3D rendering and immersive interaction. Whether through tunable lenses, varifocal displays, or intelligent software prediction, the dynamic focal plane enhances how users experience digital depth. As systems become more responsive and adaptive, the boundary between the virtual and the real will continue to blur.